#35 - When your rules look great but performance tanks

Rules are integral to any fraud prevention system that operates at scale. Every system relies heavily on rules, even if we don’t always think of them that way:

When using AI, we use rules to transform scores into decisions.

When doing manual investigations, we use rules to flag high-risk events.

I have written before about how to write high-performing rules, how to use AI for rule recommendations, and even how to write rules with AI for free.

But how do you know if a rule is performing well or not?

Today I’d like to explore rules, and fraud prevention systems in general, from the perspective of performance. How do we measure our rules in the wild?

Rules are part of a system and should be measured as such

Let’s start with the basics. Which dimensions do we even want to assess?

I’ve seen teams use many different KPIs, but in the end they all try to answer two questions:

Coverage: how much fraud will I block?

Accuracy: how much good business will I block?

When it comes to coverage, many teams use recall, a standard data science metric.

Recall is simple to calculate: it’s the share of all fraud cases that you catch.

Let’s say I have a box of 100 apples where 10 of them are rotten. If I manage to spot 5 rotten apples, then my recall is 50%.
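To make this concrete, here’s a minimal Python sketch that treats each apple as an event with an ID and computes recall as caught fraud divided by all fraud. The apple IDs and the `recall` helper are hypothetical, made up for illustration.

```python
def recall(flagged: set[int], fraud: set[int]) -> float:
    """Share of all fraud events that were flagged."""
    return len(flagged & fraud) / len(fraud)

# 100 apples with IDs 0..99; apples 0..9 are rotten (hypothetical IDs)
rotten = set(range(10))
my_catches = {0, 1, 2, 3, 4}  # I spot 5 of the 10 rotten apples

print(recall(my_catches, rotten))  # 0.5
```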

The problem with using recall is that it doesn’t allow you to calculate the incremental benefit of separate solutions. Let me explain:

In the apples example above, let's imagine I’m not working alone. I have a friend who is also trying to spot bad apples, and they manage to spot the other 5 bad apples.

Individually, we both had 50% recall each, but together we caught 10/10 rotten apples or 100% recall.

But what if we both spotted exactly the same bad apples? Individually, we still have 50% recall each, but together we only reach 50% as well.
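Here are both scenarios side by side, using the same made-up apple IDs. It’s a sketch, assuming each person’s catches can be represented as a set of IDs: individual recall is identical in both cases, and only the combined number shows the difference.

```python
def recall(flagged: set[int], fraud: set[int]) -> float:
    return len(flagged & fraud) / len(fraud)

rotten = set(range(10))  # apples 0..9 are rotten (hypothetical IDs)
mine = {0, 1, 2, 3, 4}

# Case 1: my friend spots the *other* five rotten apples
friend_disjoint = {5, 6, 7, 8, 9}
# Case 2: my friend spots exactly the same five
friend_same = {0, 1, 2, 3, 4}

for friend in (friend_disjoint, friend_same):
    individual = (recall(mine, rotten), recall(friend, rotten))
    combined = recall(mine | friend, rotten)  # union = what we catch together
    print(individual, combined)
# (0.5, 0.5) 1.0
# (0.5, 0.5) 0.5
```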

While my recall didn’t change, my impact isn’t the same in both cases.

This is why recall isn’t the best measure of how a single change affects the overall system.

So what then?

A better approach is to measure FNR, or False Negative Rate: the share of all fraud cases that go undetected by the system as a whole.

First, because it bakes in the incremental calculation. Since FNR is measured across the entire system, a rule that only catches fraud the system already catches won’t move it.

Second, FNR is very relevant to regulated Fintechs that need to stay within certain thresholds. In some respects, it’s very similar to how you calculate chargeback rates.

Let’s take the same example:

If my friend and I together caught all the bad apples, our FNR would be 0%. But if each of us spotted exactly the same 5 bad apples, our FNR would be 50%, because 5 bad apples went undetected.

In that case, my work has no incremental value and that’s exactly what we want to spot.
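A minimal sketch of FNR measured at the system level, assuming each component (a rule, a model, a manual review queue) produces a set of flagged event IDs. The `system_fnr` helper and the IDs are hypothetical. The incremental value of a component is how much FNR drops when you add it to the rest of the system.

```python
def system_fnr(flags_by_component: list[set[int]], fraud: set[int]) -> float:
    """False Negative Rate of the whole system: fraud that *no* component flagged."""
    flagged = set().union(*flags_by_component)
    missed = fraud - flagged
    return len(missed) / len(fraud)

rotten = set(range(10))  # apples 0..9 are rotten (hypothetical IDs)
mine = {0, 1, 2, 3, 4}

print(system_fnr([mine, {5, 6, 7, 8, 9}], rotten))  # 0.0
print(system_fnr([mine, {0, 1, 2, 3, 4}], rotten))  # 0.5

# Incremental effect of my work: FNR without me minus FNR with me
friend = {0, 1, 2, 3, 4}
delta = system_fnr([friend], rotten) - system_fnr([friend, mine], rotten)
print(delta)  # 0.0 -> my work adds nothing on top of my friend's
```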

Side note: When I can, I also try to complement FNR with clear value figures. “This rule would save an average of $5,000 a month” tells me exactly what I need to know.
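One way to arrive at a figure like that, sketched under loud assumptions: a backtest over a known number of months, a fraud label per event, and the simplification that every fraud event a rule declines saves its full amount. Only fraud that no other part of the system already catches counts toward the rule’s value. `Event` and `incremental_monthly_value` are hypothetical names, and the data is invented.

```python
from dataclasses import dataclass

@dataclass
class Event:
    event_id: int
    amount: float   # transaction amount in USD
    is_fraud: bool  # label from the backtest period

def incremental_monthly_value(events, rule_flags, system_flags, months):
    """Average monthly fraud losses the rule prevents that the rest of the system misses.

    Assumes a declined fraud event saves its full amount (a simplification).
    """
    saved = sum(
        e.amount for e in events
        if e.is_fraud and e.event_id in rule_flags and e.event_id not in system_flags
    )
    return saved / months

events = [
    Event(1, 4_000.0, True),
    Event(2, 6_000.0, True),
    Event(3, 5_000.0, True),
    Event(4, 120.0, False),
]
rule_flags = {1, 2, 3, 4}
system_flags = {3}  # event 3 is already caught by an existing rule
print(incremental_monthly_value(events, rule_flags, system_flags, months=2))  # 5000.0
```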

False Positive Rate is a common but misleading KPI

Okay, what about accuracy?

If we use FNR already, we should use FPR, right?

FPR, or False Positive Rate, tells you how many good events you blocked out of the entire good population.

Let’s say we also wrongly flagged 5 good apples as bad ones. Then our FPR = 5/90 (100 apples minus the 10 rotten ones), or about 5.6%.
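The same calculation as a short sketch, with hypothetical IDs for the 5 wrongly flagged good apples:

```python
def fpr(flagged: set[int], fraud: set[int], population: set[int]) -> float:
    """Share of good events that were (wrongly) flagged."""
    good = population - fraud
    return len(flagged & good) / len(good)

apples = set(range(100))
rotten = set(range(10))                  # apples 0..9 are rotten
wrongly_flagged = {50, 51, 52, 53, 54}   # 5 good apples (hypothetical IDs)

print(round(fpr(wrongly_flagged, rotten, apples), 4))  # 0.0556
```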

The reason why I don’t like using FPR is that it doesn’t really say anything about the quality of our solution. As long as I wrongly tag 5 good apples as bad, it doesn’t matter if I spot all 10 rotten ones or none of them. My FPR would stay the same.

A much better metric is precision: the share of the events I flag that are actually fraud. For example:

If I catch 10 bad apples and 5 good ones, my precision is 10/(10+5) ≈ 67%

If I catch 1 bad apple and 5 good ones, my precision is 1/(1+5) ≈ 16.7%

Both would have the same FPR, but you can now see clearly which one is better.
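A sketch comparing the two scenarios, again with made-up apple IDs, shows FPR staying flat while precision moves:

```python
def precision(flagged: set[int], fraud: set[int]) -> float:
    """Share of flagged events that are actually fraud."""
    return len(flagged & fraud) / len(flagged)

def fpr(flagged: set[int], fraud: set[int], population: set[int]) -> float:
    good = population - fraud
    return len(flagged & good) / len(good)

apples = set(range(100))
rotten = set(range(10))
good_hits = {50, 51, 52, 53, 54}  # the same 5 good apples in both scenarios

catch_all = set(range(10)) | good_hits  # 10 rotten + 5 good
catch_one = {0} | good_hits             # 1 rotten + 5 good

for flagged in (catch_all, catch_one):
    print(round(fpr(flagged, rotten, apples), 4), round(precision(flagged, rotten), 3))
# 0.0556 0.667
# 0.0556 0.167
```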

Measuring it incrementally can change the whole picture

When it comes to precision, we still need to be mindful of incrementality.

Let's say my friend spotted 1 bad apple and 5 good ones, with a precision of about 16.7%.

If I do the same, what will be my incremental precision?

Well, it depends.

Let’s say 3 of the good apples I caught were already part of the 5 my friend caught.

Then, assuming the bad apple I caught is a different one from my friend’s, my incremental precision would be 1/(1+5-3) ≈ 33%.

Why? Because I catch 1 bad apple for the price of only 2 “new” good apples.

Even though separately we have the same performance, if my work is seen as a new addition, my precision is actually twice as good!

And if I catch the same bad apple as my friend did? Then all I add are new false positives, and my incremental precision drops to 0%.
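A minimal sketch of incremental precision, assuming the existing system’s flags and the new rule’s flags are both available as sets of event IDs (the helper name and IDs are hypothetical). Only the events the new rule adds on top of what’s already flagged count:

```python
def incremental_precision(new_flags: set[int], existing_flags: set[int], fraud: set[int]):
    """Precision of only the events the new rule adds on top of the existing system."""
    added = new_flags - existing_flags
    if not added:
        return None  # nothing new flagged: no incremental effect at all
    return len(added & fraud) / len(added)

rotten = set(range(10))            # apples 0..9 are rotten
friend = {0, 50, 51, 52, 53, 54}   # 1 rotten + 5 good

# I catch a *different* rotten apple; 3 of my 5 good apples overlap with my friend's
me = {1, 52, 53, 54, 60, 61}
print(round(incremental_precision(me, friend, rotten), 3))  # 0.333

# I catch the *same* rotten apple: everything I add is a false positive
me_same = {0, 52, 53, 54, 60, 61}
print(incremental_precision(me_same, friend, rotten))  # 0.0
```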

That’s why we should always measure incremental benefit over the rest of the system.

When fraud systems fail despite having great rules

Okay, enough with the apples already! What makes a good rule?

The primary metric I use to judge a rule is precision. If the incremental precision is high enough (25-50% in most cases), then it’s most likely a rule we should activate.

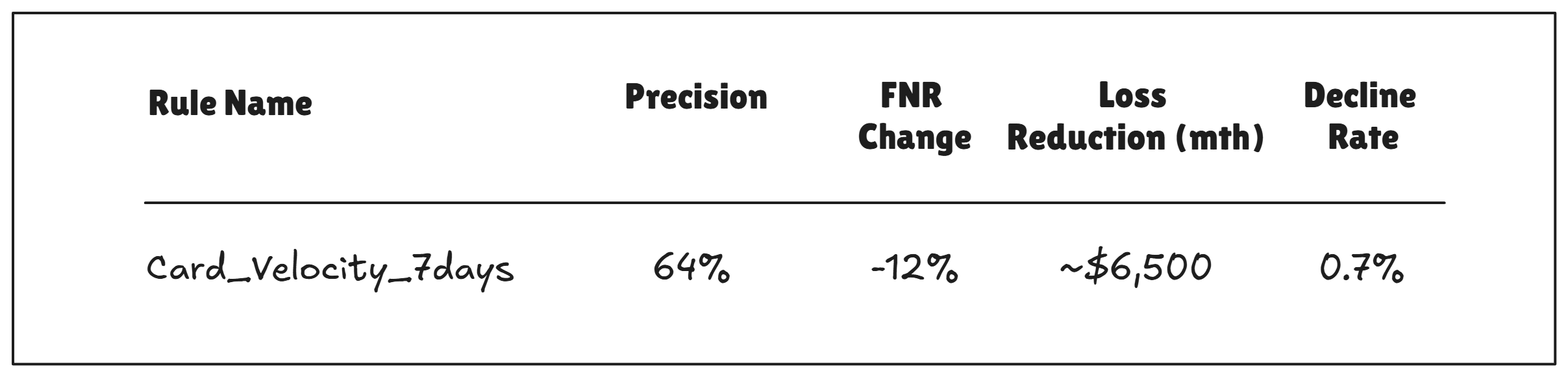

On top of that, I review a few other metrics before approving a rule, so I understand the overall effect we should expect: its effect on FNR, its value (when applicable), and its decline rate.

Side note: decline rate represents the overall declines we expect to see, both fraud and non-fraud. It lets us quickly validate our expectations once the rule goes live, without first having to identify which declines were false positives.
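Because decline rate needs no fraud labels, it’s the first number you can check after go-live. A sketch of the incremental version, assuming a hypothetical flow of 10,000 transactions where the new rule overlaps with some of the existing declines:

```python
def decline_rate(declined_ids: set[int], all_ids: set[int]) -> float:
    """Share of the total flow that is declined, fraud or not."""
    return len(declined_ids & all_ids) / len(all_ids)

# Hypothetical flow of 10,000 transactions
all_ids = set(range(10_000))
existing_declines = set(range(0, 150))  # 1.5% declined today
rule_flags = set(range(100, 220))       # rule flags 120 events, 50 of them already declined

before = decline_rate(existing_declines, all_ids)
after = decline_rate(existing_declines | rule_flags, all_ids)
print(before, after, round(after - before, 4))  # 0.015 0.022 0.007
```

Counting the overlap only once is what makes this incremental: the rule flags 120 events, but only 70 of them are new declines.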

Here’s what a basic rule approval request looks like (all numbers are incremental):

This rule will catch ~2 fraud cases for every false positive. It’ll reduce fraud losses by 12% (~$6,500 a month), and will decline about 0.7% of the total flow.
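A request like this can be generated straight from the backtest. The sketch below assumes hypothetical field names and uses 25% (the low end of the range mentioned above) as a default precision threshold; the input numbers are invented to reproduce the example request, not real data.

```python
from dataclasses import dataclass

@dataclass
class RuleBacktest:
    """Incremental backtest numbers for one candidate rule (hypothetical fields, per month)."""
    new_fraud: int            # fraud events no other component already catches
    new_false_positives: int  # good events no other component already declines
    monthly_losses: float     # current monthly fraud losses (USD)
    monthly_value: float      # monthly losses the rule would prevent (USD)
    monthly_flow: int         # total events per month

    def precision(self) -> float:
        return self.new_fraud / (self.new_fraud + self.new_false_positives)

    def summary(self, min_precision: float = 0.25) -> str:
        # Fraud caught per false positive; guard against a rule with no new false positives
        ratio = (self.new_fraud / self.new_false_positives
                 if self.new_false_positives else float("inf"))
        loss_cut = self.monthly_value / self.monthly_losses
        # Every newly flagged event is a new decline, fraud or not
        decline = (self.new_fraud + self.new_false_positives) / self.monthly_flow
        verdict = "approve" if self.precision() >= min_precision else "reject"
        return (f"Catches ~{ratio:.0f} fraud cases per false positive, "
                f"cuts fraud losses by {loss_cut:.0%} (~${self.monthly_value:,.0f}/month), "
                f"declines {decline:.1%} of the flow -> {verdict}")

rule = RuleBacktest(new_fraud=140, new_false_positives=70,
                    monthly_losses=54_000, monthly_value=6_500, monthly_flow=30_000)
print(rule.summary())
```

Keeping the threshold as a parameter makes it easy to be stricter (e.g. 50%) for rules that hit high-value customers.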

Clear. Easy. Approved.

Why is this so important?

Every day I meet businesses that can’t understand why their system is breaking down when their rules look good on paper.

The answer is simple: they measure their rules as standalones. But looking closely, a lot of these rules catch the same fraud trends, and just keep piling up the false positives!

Sometimes it’s not about more data, better tools, or fancy AI.

Sometimes it’s just about how you build your Excel sheet.

How do you measure and approve rules for live release? Hit the reply button and let me know!

In the meantime, that’s all for this week.

See you next Saturday.

P.S. If you feel like you're running out of time and need some expert advice on getting your fraud strategy on track, here's how I can help you:

Free Discovery Call - Unsure where to start or have a specific need? Schedule a 15-min call with me to assess if and how I can be of value.


Consultation Call - Need expert advice on fraud? Meet with me for a 1-hour consultation call to gain the clarity you need. Guaranteed.


Fraud Strategy Action Plan - Is your Fintech struggling with balancing fraud prevention and growth? Are you thinking about adding new fraud vendors or even offering your own fraud product? Sign up for this 2-week program to get your tailored, high-ROI fraud strategy action plan so that you know exactly what to do next.


Enjoyed this and want to read more? Sign up to my newsletter to get fresh, practical insights weekly!

![Native[risk]](http://images.squarespace-cdn.com/content/v1/65704856a75e450a6d17d647/f3aa0ca7-8a4d-4034-992f-9ae514f1127e/Native+risk_logo-invert.png?format=1500w)