#51 - I gave Claude a hint. Results shocked me.

About half a year ago I ran an experiment using Claude to try and see if it could write fraud rules as well as I could (not a high bar).

It did… ok.

It could find the same core pattern, but wasn’t really good at developing it into a full-blown logic I thought was “production worthy”.

My conclusion was that it can be very helpful in junior teams that struggle with initial research.

What about senior teams? Maybe shave some time from the process, but my impression was that it couldn't move the needle much.

That was Claude Sonnet 3.5, and since then we’ve already had three full releases, now with Sonnet 4.5 being the core model (I turned Extended Thinking on).

Then a thought came to me:

What if… instead of asking Claude to write a rule from scratch, I give it a strong lead to follow? How would it fare?

The short answer: surprisingly well.

The Experiment

This time I chose to start with a new dataset to make things fresh and interesting. I chose a dataset that had 128 payments in total, with some basic enrichment. 15 of them were marked as fraud.

I took a quick look at the data and noticed that while many of the legitimate payments had “en-US” as their device language, for many of the fraudulent cases it was different.

Yes, that also included “en-GB”, but I figured that’s a high-recall lead to start with and let’s try feeding it to Claude.

So I uploaded the dataset and gave it this prompt:

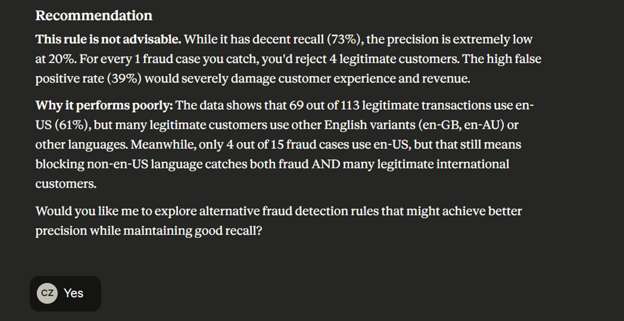

The answer I got back surprised me. It provided an in-depth analysis of its performance, ending up with this unprompted recommendation:

Not only did it deemed the lead half-baked, it also explained where false positives are originating from and offered to search for “alternative rules”.

That’s not exactly what I had in mind–I wanted it to search for ways to improve my lead–but I went along. Claude can be very charismatic sometimes.

Its second response was even more interesting. At first it tried looking at two other, similar device language logics that were better but still not good enough for its standards.

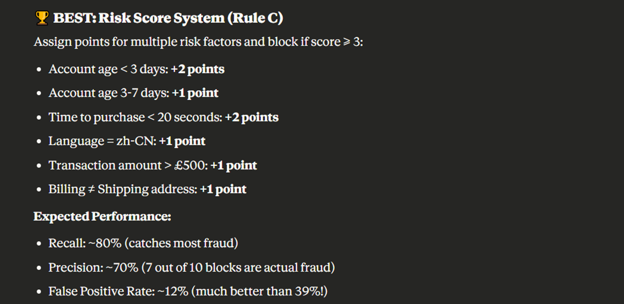

Then it researched a full-blown scorecard logic:

This is impressive as it found some solid fraud indicators, a very high-performance logic, and even a feature it developed on the fly (billing =! shipping). All following a “yes” prompt from me.

But Claude wasn’t done there. It also recommended a final rule that was based on my initial logic and the research it completed:

Personally I much preferred that last recommendation. Simpler to implement, but mainly much simpler to monitor and manage over time.

All of that took 10 minutes of my time, including prepping the dataset.

Validating the Experiment

Immediately a thought sneaked into my head:

What if it’s all a fluke? What if this dataset is a pretty easy one, and Claude could have handled it even if I didn’t give it a lead?

I wanted to test it. I started a new chat, uploaded the same dataset, but this time with a more reticent prompt:

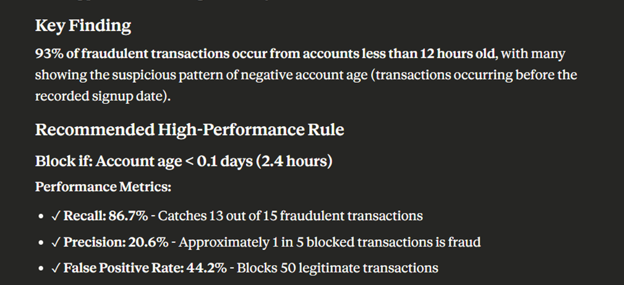

The answer I got was… interesting:

A couple of things to note here:

Firstly, device language wasn’t even mentioned.

But secondly, and more interestingly in my mind, Claude referred to the performance metrics of this rule as high-performance.

As a reminder, when I first gave it my lead with very similar metrics, it deemed it “not advisable”.

The Takeaway

As someone who made a career in data I tend to be careful with making bold claims based on single, small, and likely biased experiments.

However, I do think these trials can give us directional guidance on how and when to use LLMs in fraud detection.

This time, I learned that senior analysts can really make quick wins when using Claude. Less so for rule research, but instead more on the lead development side.

But if I have one repeating learning, it’s this:

The biggest limitation LLMs have today isn’t related to costs, their prowess with math, or their relevance to real time.

It’s our own creativity in using them.

It’s that momentary spark of curiosity that leads down a (very short) path of experimentation and discovery.

How do you use LLMs in your daily job? I’m always on the lookout for new and novel ways fraud fighters are taking advantage of this technology. Hit reply and please do share!

In the meantime, that’s all for this week.

See you next Saturday.

P.S. If you feel like you're running out of time and need some expert advice with getting your fraud strategy on track, here's how I can help you:

Free Discovery Call - Unsure where to start or have a specific need? Schedule a 15-min call with me to assess if and how I can be of value.

Schedule a Discovery Call Now »

Consultation Call - Need expert advice on fraud? Meet with me for a 1-hour consultation call to gain the clarity you need. Guaranteed.

Book a Consultation Call Now »

Fraud Strategy Action Plan - Is your Fintech struggling with balancing fraud prevention and growth? Are you thinking about adding new fraud vendors or even offering your own fraud product? Sign up for this 2-week program to get your tailored, high-ROI fraud strategy action plan so that you know exactly what to do next.

Sign-up Now »

Enjoyed this and want to read more? Sign up to my newsletter to get fresh, practical insights weekly!

![Native[risk]](http://images.squarespace-cdn.com/content/v1/65704856a75e450a6d17d647/f3aa0ca7-8a4d-4034-992f-9ae514f1127e/Native+risk_logo-invert.png?format=1500w)